How Passive Liveness Detection Fights Deepfake Attacks

Liveness detection helps confirm whether a biometric sample comes from a live human face or from a fake representation of one. During a video call, the technology can even distinguish a video replayed from another device from the live feed of the person sitting at the other end. However, attackers can still use deepfakes to trick and spoof biometric authentication systems, including voice and facial recognition, and bypass the defenses of some of the most advanced corporate networks. Deepfakes increased by 550% between 2019 and 2023! Advances in AI have fuelled this growth, and the result is an ongoing contest between the quality of deepfakes and the capability of liveness detection technologies.

What is Passive Liveness Detection?

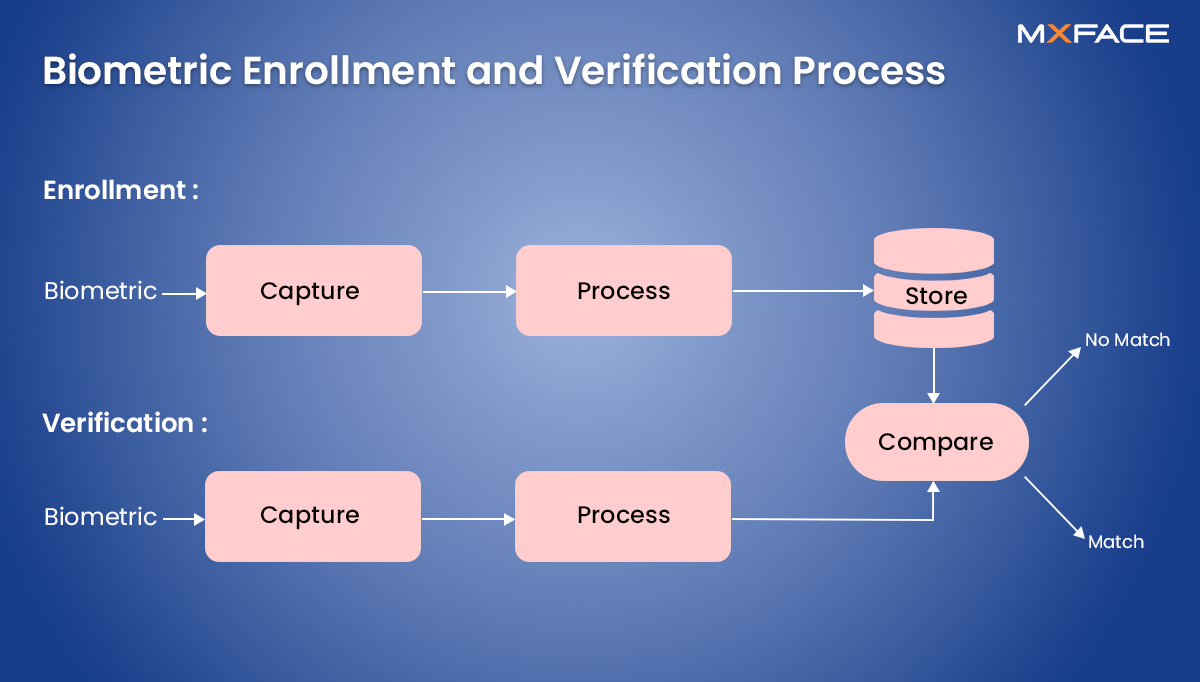

In 1950, Alan Turing famously proposed what became known as the "Turing Test" to approach the age-old philosophical question of whether machines can “think”, by testing their ability to imitate human conversation. Years earlier, in 1936, his abstract "Turing machine" had reduced calculation to its most basic form: symbols read from and written to a “tape” of memory cells. That abstraction led to one of the most significant breakthroughs of the modern computing era. Interestingly, once we started using our faces as biometrics to protect critical information, we needed advanced algorithms to spot inconsistencies, and the problem goes right back to Turing's evaluator being unable to tell a human from a machine. Passive liveness detection is a process that helps distinguish live human beings from fake ones without asking the user to do anything: it analyzes a single image or a short video in the background. It has an “active” counterpart, which also verifies whether a biometric sample (fingerprint, iris or face) comes from a live person, but does so by prompting the user to perform a simple task, such as moving the head. In an active check, if the requested movement does not occur, liveness is not confirmed, which means a static image carrying fake biometric data cannot pass the challenge on its own.

Face Distortion in Deepfakes

Modern face recognition algorithms are trained to work in a variety of lighting conditions and even across large age gaps, so it is important to understand how a face changes naturally over the years. A face's appearance can also be changed synthetically to evade recognition, which is why biometric systems must cope with both long- and short-term changes in appearance. A recognition system can verify and authenticate a face against the information in its database, but on its own it cannot raise an alarm when a familiar face suddenly shows new features. This is where liveness detection comes in. Multi-modal biometric authentication goes further by combining face comparison with liveness checks to detect deepfakes across many formats; in simple terms, it uses multiple biometric traits simultaneously to verify identity and detect spoofing attempts. A single selfie can be enough to detect digital manipulation and determine whether the subject is live. With computer vision and deep learning algorithms built around face detection, passive face liveness detection overcomes many of these challenges. Active checks take a different route: the system asks the user to rotate the head in a specific way, or to smile, frown or raise an eyebrow, and the algorithm analyzes whether each action was completed to verify the person's “liveness”, which makes it very hard for a deepfake to get through.
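The prompted-action check described at the end of the paragraph above is a challenge-response (active) liveness test. The following is a minimal sketch of its decision logic only, assuming an upstream model already reports which facial action it observed and when; the action names, the `Observation` type and both functions are hypothetical. The challenge is randomized because a pre-recorded video is unlikely to contain the exact sequence requested.

```python
import random
from dataclasses import dataclass

# Hypothetical action vocabulary; a real system defines its own set of prompts.
ACTIONS = ("turn_left", "turn_right", "smile", "frown", "raise_eyebrow")


@dataclass
class Observation:
    action: str       # action reported by an assumed upstream face-analysis model
    timestamp: float  # seconds since the challenge was shown to the user


def issue_challenge(length=3, rng=None):
    """Pick a random sequence of distinct actions so a pre-recorded clip is unlikely to match."""
    rng = rng or random.SystemRandom()
    return rng.sample(ACTIONS, length)


def verify_response(challenge, observations, time_limit=10.0):
    """Return True if every challenged action appears, in order, within the time limit.

    Other actions detected in between are ignored rather than treated as failures.
    """
    remaining = list(challenge)
    for obs in sorted(observations, key=lambda o: o.timestamp):
        if obs.timestamp > time_limit:
            break
        if remaining and obs.action == remaining[0]:
            remaining.pop(0)
    return not remaining


if __name__ == "__main__":
    challenge = issue_challenge()
    # Simulate a user who performs each requested action one second apart.
    observations = [Observation(a, 1.0 + i) for i, a in enumerate(challenge)]
    print(challenge, verify_response(challenge, observations))  # prints the challenge and True
    print(verify_response(challenge, []))  # False: a static image performs no actions
```

Ignoring unrelated actions keeps the check tolerant of natural fidgeting, while the time limit prevents an attacker from taking unlimited time to assemble a matching response.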

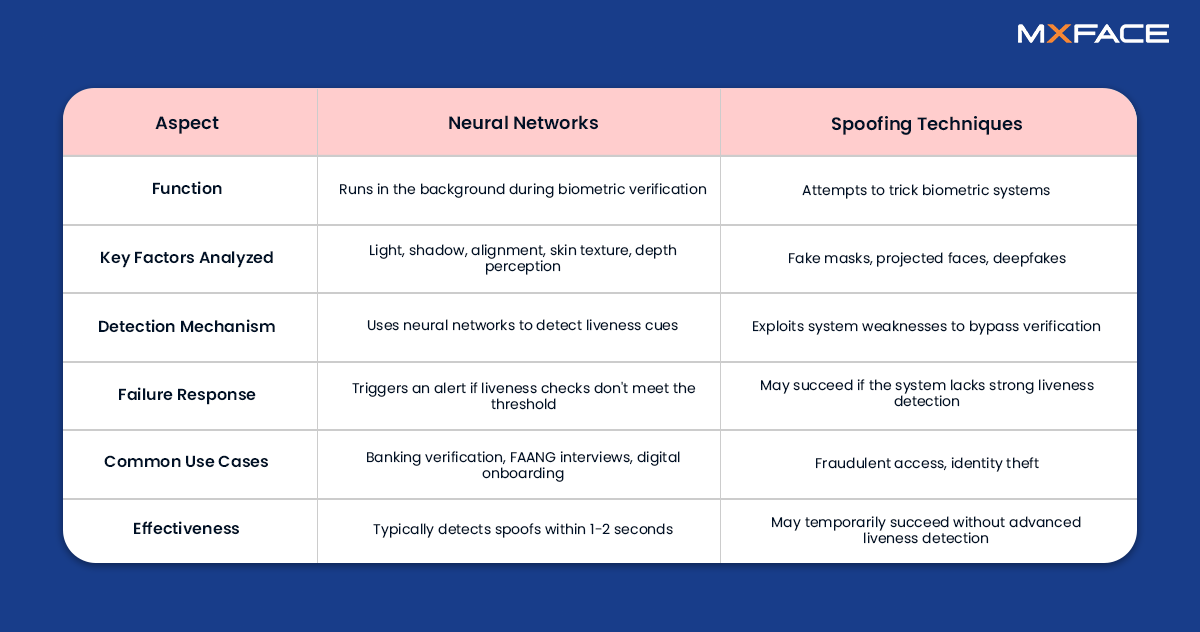

Neural Networks vs. Spoofers

The Science of Depth-Based Liveness Detection

Passive liveness checks authenticate users by analyzing computed characteristics of the captured face. Since the emergence of machine learning, researchers have explored various schemes, including texture analysis, depth mapping and infrared imaging. Facial recognition has spread from high-security border controls to everyday authentication systems, but every system has its vulnerabilities. In visual research, what matters most is the integrity of the relationship between the researcher and the subject, and so-called limitations are simply the gaps between understanding and discovery. Liveness detection, especially passive liveness, which runs in the background, relies on an extensive set of morphological and anthropometric coefficients. Combined with machine learning, these coefficients build a 3D model that can detect micro-movements with subpixel accuracy in real time. In other words, passive liveness enables a facial authentication system to:

- Recognize facial expressions

- Estimate head and face position

- Track eye pupil movement

- Detect color changes on the skin

- Measure heart rate

- Analyze a single image for verification
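Detecting skin color changes and measuring heart rate can be illustrated with the idea behind remote photoplethysmography (rPPG): blood flow causes tiny periodic changes in skin color, usually strongest in the green channel, and the dominant frequency of that signal approximates the pulse. The sketch below assumes the face region has already been located in every frame and reduced to its mean green value (the input list and the function name are hypothetical). It uses a plain discrete Fourier transform restricted to a plausible heart-rate band; production systems use far more robust signal processing. A printed photo or a mask produces no such pulse signal, so a weak spectral peak is a warning sign.

```python
import math


def estimate_heart_rate_bpm(green_means, fps, min_bpm=42.0, max_bpm=180.0):
    """Estimate pulse rate from per-frame mean green values of a skin region.

    Returns (bpm, peak_power_ratio). peak_power_ratio is the share of in-band
    spectral power at the strongest frequency; low values suggest no clear pulse.
    Returns (None, 0.0) when the signal has no in-band energy at all.
    """
    n = len(green_means)
    if n < 2 or fps <= 0:
        raise ValueError("need at least two samples and a positive frame rate")
    mean = sum(green_means) / n
    signal = [v - mean for v in green_means]  # remove the constant (DC) skin tone

    powers = []  # (frequency_hz, power) for each DFT bin inside the heart-rate band
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not (min_bpm / 60.0 <= freq <= max_bpm / 60.0):
            continue
        re = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        im = -sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        powers.append((freq, re * re + im * im))

    total = sum(p for _, p in powers)
    if not powers or total == 0.0:
        return None, 0.0
    best_freq, best_power = max(powers, key=lambda fp: fp[1])
    return best_freq * 60.0, best_power / total


if __name__ == "__main__":
    fps = 30.0
    # Synthetic 10-second trace: a 1.2 Hz (72 bpm) pulse riding on a constant skin tone.
    trace = [100 + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps) for t in range(300)]
    bpm, ratio = estimate_heart_rate_bpm(trace, fps)
    print(round(bpm), round(ratio, 2))  # 72 1.0
```

The frequency resolution is `fps / n`, so longer observation windows give finer heart-rate estimates at the cost of a slower check.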

At this point, it is important to understand that liveness detection was not discovered overnight; it has become more sophisticated over the years alongside continuous technological progress. For instance, OpenCV (the Open Source Computer Vision Library), with capabilities such as face detection and image processing, is a key tool for building robust datasets containing millions of real and spoofed facial images for liveness detection.

A computer needs to be told what it is fighting against, with examples of what is genuine and what is fake. That is why this open-source library plays a crucial role in advancing face liveness detection technology: it helps developers integrate face anti-spoofing algorithms into facial recognition applications.

Liveness Detection with Advanced Computer Vision

Advances in computer vision have taken liveness detection far beyond the features it started with. Passive liveness detection can now spot a fake face in milliseconds across thousands of images a day, by noticing unnatural lighting or the rigid stillness of a recorded video, and it has kept pace with newer threats such as high-resolution masks.

1. Texture Analysis

A fake face might look convincing at a glance, but zoom in, and the truth unravels. Paper printouts, even high-quality ones, lack the microscopic irregularities of human skin. A screen displaying a face can’t recreate the depth and pores of real flesh. Liveness detection for face recognition exploits this, using high-resolution imaging and frequency analysis to catch forgeries in the fine details.
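One classic way to quantify fine texture, widely used in face anti-spoofing research, is the Local Binary Pattern (LBP) histogram: each pixel is encoded by comparing it with its eight neighbours, and the distribution of those codes summarizes micro-texture. The article does not say which texture features any particular product uses, so treat this as an illustrative sketch on a grayscale image given as a list of lists; in practice the histogram would be fed to a trained classifier rather than compared by hand, and the function names here are hypothetical.

```python
def lbp_codes(gray):
    """Compute 8-neighbour Local Binary Pattern codes for interior pixels.

    gray: 2D list of grayscale intensities (rows of equal length, at least 3x3).
    Returns a 2D list of codes in 0..255 for the (h-2) x (w-2) interior.
    """
    # Neighbour offsets in clockwise order starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    h, w = len(gray), len(gray[0])
    codes = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            center = gray[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                if gray[y + dy][x + dx] >= center:  # neighbour at least as bright sets the bit
                    code |= 1 << bit
            row.append(code)
        codes.append(row)
    return codes


def lbp_histogram(gray):
    """Return a normalized 256-bin histogram of LBP codes (sums to 1)."""
    hist = [0] * 256
    for row in lbp_codes(gray):
        for code in row:
            hist[code] += 1
    total = sum(hist)
    return [count / total for count in hist]


def histogram_distance(h1, h2):
    """Chi-square distance between two histograms; 0 means identical texture statistics."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


if __name__ == "__main__":
    flat = [[128] * 8 for _ in range(8)]                                   # uniform patch
    checker = [[((x + y) % 2) * 255 for x in range(8)] for y in range(8)]  # high-frequency pattern
    print(round(histogram_distance(lbp_histogram(flat), lbp_histogram(checker)), 3))  # 0.667
```

Because each code depends only on whether neighbours are brighter or darker than the centre, LBP is insensitive to uniform brightness changes, which is one reason it suits the fine-detail comparison described above.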

2. Optical Flow

Real faces don’t stay perfectly still. Tiny muscle shifts, involuntary micro-movements, and natural light refraction separate the living from the lifeless. A photo held up to a camera remains rigid, but a real face breathes, twitches, and subtly changes. Optical flow tracking captures this, analyzing motion vectors to ensure what’s on the screen isn’t just a clever trick.
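Dense optical flow algorithms, such as the Farnebäck method available in OpenCV as `calcOpticalFlowFarneback`, are beyond a short example, but the intuition can be sketched with simple block matching: estimate one motion vector per block between two frames, then check whether all blocks moved identically. This is a simplified, hypothetical stand-in for optical flow, not any product's method. A flat photo moved in front of the camera tends to produce one uniform (rigid) motion, whereas a live face produces locally varying motion. Frames are grayscale lists of lists; the block size, search range and threshold are illustrative assumptions.

```python
def block_motion_vectors(prev, curr, block=4, search=2):
    """Estimate one (dy, dx) motion vector per block by minimizing the sum of absolute differences.

    prev, curr: grayscale frames as 2D lists of equal size.
    Blocks are placed so that the whole search window stays inside the frame.
    """
    h, w = len(prev), len(prev[0])
    vectors = []
    for by in range(search, h - block - search + 1, block):
        for bx in range(search, w - block - search + 1, block):
            best, best_cost = (0, 0), None
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    # Compare the block in `prev` with the displaced block in `curr`.
                    cost = sum(
                        abs(prev[by + y][bx + x] - curr[by + dy + y][bx + dx + x])
                        for y in range(block)
                        for x in range(block)
                    )
                    if best_cost is None or cost < best_cost:
                        best, best_cost = (dy, dx), cost
            vectors.append(best)
    return vectors


def motion_spread(vectors):
    """Mean squared distance of the block vectors from their average; 0 means perfectly uniform motion."""
    if not vectors:
        raise ValueError("frames too small for the chosen block and search sizes")
    n = len(vectors)
    mean_dy = sum(v[0] for v in vectors) / n
    mean_dx = sum(v[1] for v in vectors) / n
    return sum((dy - mean_dy) ** 2 + (dx - mean_dx) ** 2 for dy, dx in vectors) / n


def looks_rigid(prev, curr, threshold=0.1):
    """Hypothetical rule: treat a frame pair as photo-like when every block moved the same way."""
    return motion_spread(block_motion_vectors(prev, curr)) < threshold
```

Note that a perfectly still scene also counts as rigid under this rule, so in practice such a motion cue would be one signal among several rather than a verdict on its own.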

3. 3D Depth Mapping

A printed photo or a screen is flat, but a real face has depth. Depth sensors, structured light, and stereo vision techniques measure the contours of a face, verifying that it isn’t just a flat surface pretending to be human. Some systems use infrared imaging, detecting heat signatures that a printed photo can’t replicate.
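When a depth map is available, one simple flatness test is to fit a plane z = a·x + b·y + c to the depth samples of the face region by least squares and measure the residual: a sheet of paper or a screen, even when tilted, fits a plane almost perfectly, while a real face with its nose, eye sockets and cheeks does not. This is a minimal sketch, assuming depth values in millimetres arranged as a 2D list; the function names and the 2 mm threshold are illustrative assumptions, and real systems rely on learned models rather than a single rule.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def fit_plane(depth):
    """Least-squares plane z = a*x + b*y + c through a depth map (2D list, at least 2x2)."""
    pts = [(x, y, z) for y, row in enumerate(depth) for x, z in enumerate(row)]
    n = len(pts)
    sx = sum(x for x, _, _ in pts)
    sy = sum(y for _, y, _ in pts)
    sz = sum(z for _, _, z in pts)
    sxx = sum(x * x for x, _, _ in pts)
    syy = sum(y * y for _, y, _ in pts)
    sxy = sum(x * y for x, y, _ in pts)
    sxz = sum(x * z for x, _, z in pts)
    syz = sum(y * z for _, y, z in pts)
    # Normal equations of the least-squares problem, solved with Cramer's rule.
    a_mat = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    det = _det3(a_mat)
    if det == 0:
        raise ValueError("depth map must span at least two rows and two columns")
    coeffs = []
    for col in range(3):
        m = [row[:] for row in a_mat]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(_det3(m) / det)
    return tuple(coeffs)  # (a, b, c)


def plane_rms_residual(depth):
    """Root-mean-square distance (along z) between the depth samples and their best-fit plane."""
    a, b, c = fit_plane(depth)
    errs = [(z - (a * x + b * y + c)) ** 2 for y, row in enumerate(depth) for x, z in enumerate(row)]
    return math.sqrt(sum(errs) / len(errs))


def looks_flat(depth, threshold_mm=2.0):
    """Hypothetical rule: a surface this close to a plane is treated as a photo or screen."""
    return plane_rms_residual(depth) < threshold_mm


if __name__ == "__main__":
    tilted_sheet = [[500 + 2 * x + 3 * y for x in range(9)] for y in range(9)]
    # Gaussian bump 20 mm closer to the camera at the centre, a crude stand-in for a nose.
    face_like = [[500 - 20 * math.exp(-((x - 4) ** 2 + (y - 4) ** 2) / 8) for x in range(9)] for y in range(9)]
    print(looks_flat(tilted_sheet), looks_flat(face_like))  # True False
```

Fitting a full plane rather than checking for constant depth matters: a photo held at an angle has varying depth but is still perfectly planar.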

Fight Against Advanced Deepfake Attacks

Every technological defense matters, and each one is connected to the others. This is no abstraction. Every authentication method rests on protections that came before it: strip a system back to bare pixels and binary, and you can trace a line from early biometric research to today's deepfake prevention. A security system fails when its researchers stop believing that their own observations are important, and that data about a stranger's potential attack is just as important; to think otherwise is to drift into fantasy, because all our defensive strategies connect. That is why it is important to tell the story of how we got from vulnerable facial recognition to intelligent liveness detection, so that those who come after us have maps. A few algorithms in one decade can become an entire field of cybersecurity a century later. That's not idealism; that's progress.

Understanding face recognition liveness detection is difficult, but it is not reserved for specialists. Across technological eras, all sorts of people have pursued it, many with no more formal training than curiosity and determination. The ingredients are a good neural network, a clean, well-calibrated algorithm, training data and verification models. But once the model is ready, you have to test it, and for a very long time. There is no harm or blame in not achieving a perfect detection rate. The engineers who design these systems don't usually mention this, because they are not immersed in the nuanced, day-to-day world of authentication.
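Testing a liveness model at length is usually made concrete with two error rates defined in ISO/IEC 30107-3: APCER, the proportion of attack presentations wrongly accepted as live, and BPCER, the proportion of genuine (bona fide) presentations wrongly rejected. The sketch below is a simplified computation in that spirit, not a conformant implementation of the standard; the input format and labels are hypothetical. It reports APCER per attack type and the worst case across types, since a system is only as strong as its weakest point.

```python
from collections import defaultdict


def presentation_attack_metrics(results):
    """Compute simplified ISO/IEC 30107-3 style error rates.

    results: iterable of (label, accepted_as_live) pairs, where label is
    "bona_fide" or a hypothetical attack-type name such as "print" or "replay".
    Returns (apcer_by_type, worst_apcer, bpcer).
    """
    attacks = defaultdict(lambda: [0, 0])  # attack type -> [accepted_as_live, total]
    bona_fide = [0, 0]                      # [rejected, total]
    for label, accepted in results:
        if label == "bona_fide":
            bona_fide[1] += 1
            if not accepted:
                bona_fide[0] += 1
        else:
            attacks[label][1] += 1
            if accepted:
                attacks[label][0] += 1
    apcer = {kind: acc / total for kind, (acc, total) in attacks.items()}
    worst_apcer = max(apcer.values(), default=0.0)
    bpcer = bona_fide[0] / bona_fide[1] if bona_fide[1] else 0.0
    return apcer, worst_apcer, bpcer


if __name__ == "__main__":
    # Toy counts for illustration only.
    sample = (
        [("bona_fide", True)] * 9
        + [("bona_fide", False)]
        + [("print", False)] * 4
        + [("replay", True), ("replay", False)]
    )
    print(presentation_attack_metrics(sample))  # ({'print': 0.0, 'replay': 0.5}, 0.5, 0.1)
```

Lowering the acceptance threshold trades one error for the other, which is why both rates are reported together rather than a single "detection rate".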

MxFace Liveness Detection SDK

Spoofing attacks will get smarter. AI-generated deepfakes, hyper-realistic masks, and advanced projection techniques are already testing the limits, but passive liveness detection is evolving just as fast. Neural networks trained on millions of real and fake samples now ship inside state-of-the-art software development kits, which is why the MxFace liveness detection SDK keeps getting better at spotting fakes. Multi-modal approaches that combine texture, depth, and motion analysis make it far harder for any single trick to bypass security. In a world where everything from unlocking a phone to accessing a bank account relies on face authentication, one thing is clear: the future belongs to those who can prove they’re real, without even trying.
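Combining texture, depth, and motion analysis can be as simple as fusing per-check liveness scores. The sketch below is a hypothetical weighted-average fusion of scores in [0, 1] with a veto for any very low score; the function name, weights, and thresholds are assumptions for illustration and do not describe the MxFace SDK, whose internals are not specified here.

```python
def fuse_liveness_scores(scores, weights=None, accept=0.7, veto=0.2):
    """Hypothetical score-level fusion of independent liveness checks.

    scores: dict mapping a check name (e.g. "texture", "depth", "motion") to a
    score in [0, 1], where higher means more likely live.
    weights: optional dict with a weight for every check name (defaults to equal weights).
    Returns (is_live, fused_score). Any single check below `veto` rejects the
    sample outright, so strong scores elsewhere cannot mask an obvious failure.
    """
    if not scores:
        raise ValueError("at least one score is required")
    for name, s in scores.items():
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"score for {name!r} must be in [0, 1]")
    weights = weights or {name: 1.0 for name in scores}
    total_weight = sum(weights[name] for name in scores)
    fused = sum(weights[name] * s for name, s in scores.items()) / total_weight
    if min(scores.values()) < veto:
        return False, fused
    return fused >= accept, fused


if __name__ == "__main__":
    print(fuse_liveness_scores({"texture": 0.9, "depth": 0.8, "motion": 0.85}))
    # A flat photo may show plausible texture and motion but fail the depth check, so the veto rejects it.
    print(fuse_liveness_scores({"texture": 0.95, "depth": 0.1, "motion": 0.9}))
```

The veto is what gives "no single trick" its force in this sketch: an attack has to defeat every check reasonably well, not just most of them on average.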
