Age and Gender Recognition using Deep Learning in OpenCV

Table of Contents

1. What are Convolutional Neural Networks (CNNs)

2. Optimizing Age and Gender Recognition Models

- 1. Start with What You Already Have
- 2. Determine Your Actual Needs
- 3. Balancing Traditional and Modern Approaches
- 4. Evaluate Your System Requirements

3. Trends in Efficient Architecture Design

- 1. Smaller Parameter Models
- 2. Evaluating Cost-Effectiveness
- 3. Hardware Considerations
- 4. Focus on What Matters for Age and Gender Recognition

5. FAQs

- 1. How to Remove Gender Bias in Machine Learning Models?
- 2. How Does AI Recognize Gender by the Face Alone?
- 3. Which is Better Between PCA and CNN for Facial Recognition?
- 4. How Does the Facial Recognition API Work?
- 5. Why is Face Detection More Difficult Than Generic Detection?

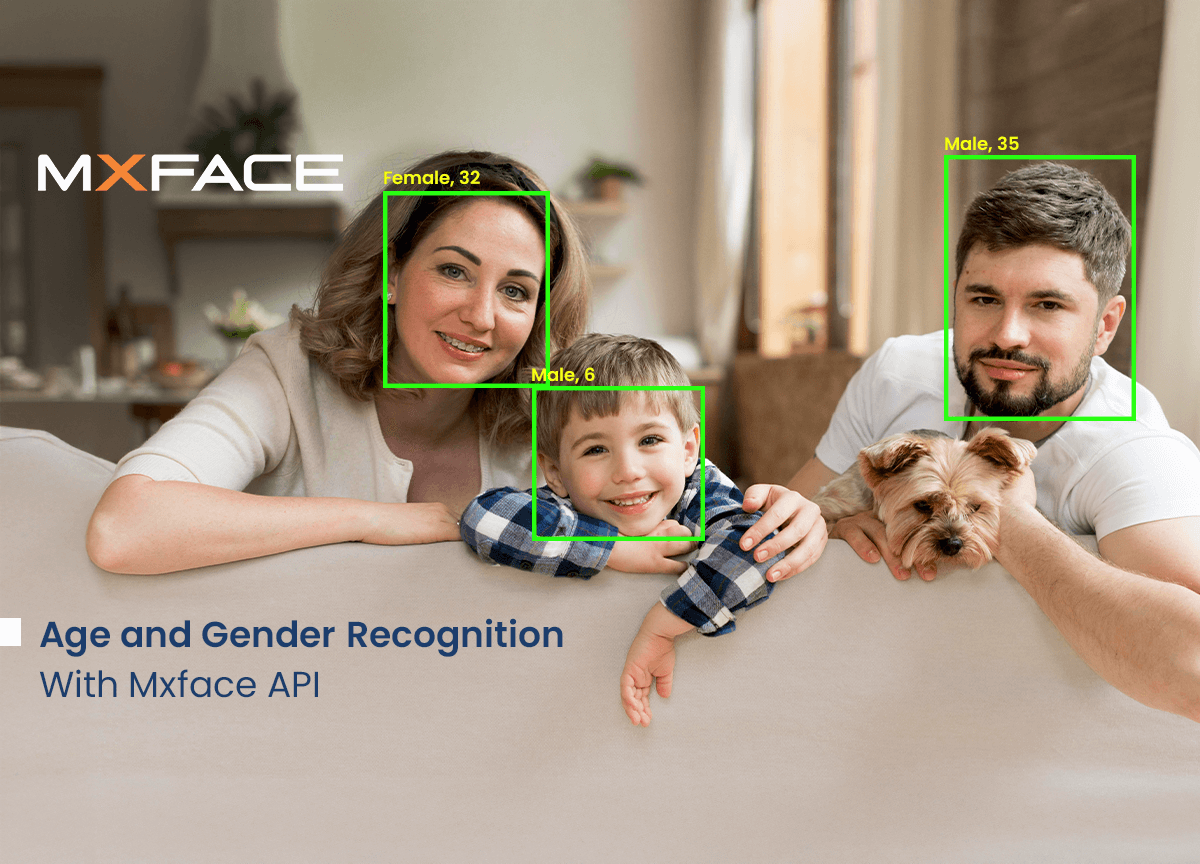

Most people do not relish the complexity of computer vision, largely because recognizing age and gender from facial features is more than a detached, technical analysis of pixels and data points. Facial analysis sits at the intersection of pattern recognition and deeply personal identification, and age and gender recognition doubly so.

Before you even begin tackling age and gender recognition, it's important to acknowledge that both facial analysis and personal identity are sensitive, and at times, you may feel uncomfortable or even concerned about the ethical implications of such technology. That's okay.

From there, consider reframing what age and gender recognition means to you.

What are Convolutional Neural Networks (CNNs)?

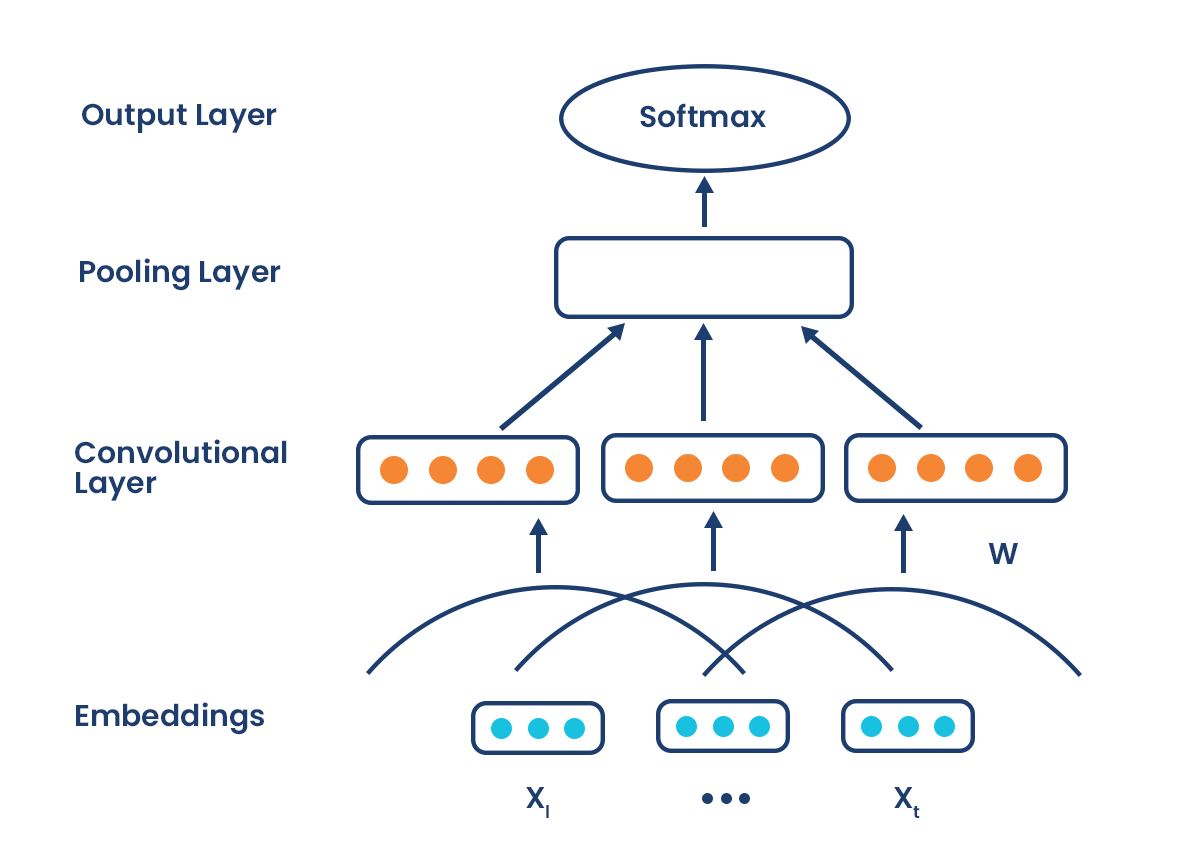

A convolutional neural network (CNN) is just one approach in your toolkit, and whether it fits depends on how much data is coming in and what you intend to derive from it. CNNs use convolutional layers to extract features such as edges, textures, and shapes directly from pixels, which makes them highly effective in image classification tasks like facial recognition. For age and gender recognition, skipping CNNs usually means giving up the approach that most modern systems are built on.
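To make the idea of a convolutional layer extracting features more concrete, here is a minimal sketch in plain Python of the sliding-window operation such a layer performs. The tiny image patch and the kernel values are illustrative assumptions; in a real CNN the kernel weights are learned during training, not hand-picked.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the core operation of a conv layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            total = 0
            # Multiply the kernel with the window under it and sum the result.
            for i in range(kh):
                for j in range(kw):
                    total += image[y + i][x + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Hypothetical 3x5 grayscale patch: dark on the left, bright on the right.
patch = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

# Hand-picked vertical-edge kernel (a trained CNN would learn such weights).
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(patch, vertical_edge)
print(feature_map)  # [[0, 27, 27]]
```

The flat dark region produces a response of 0, while the windows that straddle the dark-to-bright boundary produce a strong response. Stacking many such learned filters is what lets a CNN build up from edges to facial structures.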

Both facial analysis and neural network architecture are complex, and at times the intricacies involved may leave you frustrated, overwhelmed, or even discouraged. One way to establish a baseline for your model's performance is to look at your training and validation results over several training runs and average the accuracy.
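The averaging step can be sketched in a few lines of Python. The accuracy values below are made-up placeholders, and reporting the spread alongside the mean is an addition of this sketch, not something the article prescribes.

```python
from statistics import mean, stdev


def baseline_accuracy(run_accuracies):
    """Return (mean, sample standard deviation) of accuracy across runs."""
    if len(run_accuracies) < 2:
        raise ValueError("need at least two runs to estimate the spread")
    return mean(run_accuracies), stdev(run_accuracies)


# Hypothetical validation accuracies from five training runs.
val_accuracies = [0.80, 0.82, 0.78, 0.81, 0.79]

avg, spread = baseline_accuracy(val_accuracies)
print(f"baseline accuracy: {avg:.3f} +/- {spread:.3f}")
```

A later change to the model is only a convincing improvement if it beats the baseline mean by more than the run-to-run spread.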

Optimizing Age and Gender Recognition Models

1. Start with What You Already Have

Before you fill your GPU memory with a new deep neural network, examine your existing code first. This shift in mindset can help you plan your architecture much more effectively, and it is a habit often recommended by practitioners who have been optimizing models since the early days of deep learning.

Most developers already have something neglected in their repositories or stashed in experimental branches. By looking there at the outset, you can build your models on what you've already invested time in. If an old experiment turns out to perform poorly, it still teaches you how to design better and improves your development habits going forward.

2. Determine Your Actual Needs

Once you know what you have in your toolkit, the next step is deciding what you actually need. It can be tempting to focus on whichever deep learning algorithms are trendy, but it is more helpful to think about the big picture, meaning your complete system. Researchers recommend mapping out five to ten key features that your system must detect.

If the approach you choose cannot capture those features and their nuances, your system will fall short no matter how fashionable the algorithm is. With the list in hand, you can begin to design your neural network architecture and identify where the more obvious optimizations are.

3. Balancing Traditional and Modern Approaches

One thing that's consistent is that using CNNs and relying on convolutional layers is going to stretch your computational resources. If you really love traditional approaches, you can also consider ways to integrate them. Say you really do want your handcrafted features. You can build a hybrid system that combines CNN features with traditional features and get the best of both worlds.

A hybrid can be more computationally efficient than a large CNN alone, it provides a variety of feature representations, and it can still reach the accuracy you need. Transfer learning can also be an easy optimization. As long as the feature extraction and pattern recognition happen somewhere in the pipeline, pre-trained models are a great option that doesn't require training from scratch.
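One simple way to build such a hybrid is to concatenate a CNN embedding with handcrafted measurements into a single feature vector. The sketch below assumes both vectors have already been computed elsewhere; the function names and values are hypothetical, and normalizing each part before joining is a design choice of this sketch.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length so neither feature family dominates."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm > 0 else list(vector)


def hybrid_features(cnn_embedding, handcrafted):
    """Concatenate normalized CNN and handcrafted features into one vector."""
    return l2_normalize(cnn_embedding) + l2_normalize(handcrafted)


# Hypothetical inputs: a 4-value embedding from a pre-trained CNN and
# 2 handcrafted measurements (for example texture or geometry descriptors).
cnn_embedding = [3.0, 4.0, 0.0, 0.0]
handcrafted = [0.0, 2.0]

combined = hybrid_features(cnn_embedding, handcrafted)
print(combined)
```

The combined vector would then be passed to whatever classifier you prefer. The split does not have to be half and half; the relative sizes of the two parts are something to tune.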

4. Evaluate Your System Requirements

Just as you evaluated your existing code, it's important to evaluate your system requirements too. Many artificial neural networks solve the exact same problems, but with vastly different computational resources needed. Be sure to compare performance across different frameworks too.

Some teams find that, for their specific application, a framework other than their default offers better performance for the models they use regularly. You don't even have to leave your chair to check, because most major frameworks publish benchmarks online. Within an hour, you could have a really clear idea of which framework is going to get you the biggest bang for your buck.
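Published benchmarks are a starting point, and it can be worth timing candidates on your own machine too. The harness below is a minimal sketch: `backend_a` and `backend_b` are hypothetical stand-ins for the same model loaded in two frameworks, and the warm-up and repeat counts are arbitrary assumptions.

```python
import time
from statistics import median


def benchmark(fn, sample, warmup=3, repeats=20):
    """Return the median latency in milliseconds of calling fn(sample)."""
    for _ in range(warmup):
        fn(sample)  # warm-up calls are not timed
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return median(timings)


# Hypothetical stand-ins for one model running under two frameworks.
def backend_a(pixels):
    return sum(p * p for p in pixels)


def backend_b(pixels):
    return sum(map(lambda p: p * p, pixels))


sample = list(range(10_000))
results = {
    name: benchmark(fn, sample)
    for name, fn in [("backend_a", backend_a), ("backend_b", backend_b)]
}

for name, ms in sorted(results.items(), key=lambda item: item[1]):
    print(f"{name}: {ms:.3f} ms per call")
```

Using the median rather than the mean keeps one slow outlier call from distorting the comparison.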

Trends in Efficient Architecture Design

1. Smaller Parameter Models

In an analysis of nearly 100 commonly used CNN architectures, a third have shrunk in parameter size since the deep learning boom, according to research firms, with lightweight models, efficient networks, and mobile-friendly architectures as the leading trend. On GitHub, communities are home to more than 160,000 people tracking ever-smaller networks, increasingly efficient frameworks, and resource-conscious implementations.

2. Evaluating Cost-Effectiveness

To get a sense of a model's cost-effectiveness, look at how much computation it needs relative to its parameter count and accuracy. Compute cost is typically listed in benchmarking reports as FLOPs or multiply-accumulate operations. You may find that your favorite architectures are much pricier than simpler models. Also look beyond the most popular approaches, which are often the larger, more computationally expensive networks.
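As a rough illustration of how these costs are counted, the sketch below compares a standard convolutional layer with a depthwise separable one, the building block used by many mobile-friendly architectures. The layer dimensions are hypothetical, and the formulas ignore stride, padding, and activation costs.

```python
def conv_cost(in_ch, out_ch, kernel, out_h, out_w):
    """Parameters and multiply-accumulates (MACs) of a standard conv layer."""
    weights = kernel * kernel * in_ch * out_ch
    params = weights + out_ch  # weights plus one bias per output channel
    macs = weights * out_h * out_w  # each weight is used once per output pixel
    return params, macs


def separable_conv_cost(in_ch, out_ch, kernel, out_h, out_w):
    """Same counts for a depthwise conv followed by a 1x1 pointwise conv."""
    depthwise_weights = kernel * kernel * in_ch
    pointwise_weights = in_ch * out_ch
    params = depthwise_weights + in_ch + pointwise_weights + out_ch
    macs = (depthwise_weights + pointwise_weights) * out_h * out_w
    return params, macs


# Hypothetical layer: 64 -> 128 channels, 3x3 kernel, 56x56 output map.
std_params, std_macs = conv_cost(64, 128, 3, 56, 56)
sep_params, sep_macs = separable_conv_cost(64, 128, 3, 56, 56)

print(f"standard:  {std_params:,} params, {std_macs:,} MACs")
print(f"separable: {sep_params:,} params, {sep_macs:,} MACs")
print(f"MAC reduction: {std_macs / sep_macs:.1f}x")
```

For this example layer the separable version needs roughly an eighth of the computation, which is the kind of difference worth checking before committing to an architecture.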

3. Hardware Considerations

You may assume that opting for a high-end GPU is the obvious workaround to computational limitations, but make sure to do your homework before committing to expensive hardware. Researchers have found that, measured by performance per watt, specialized hardware can be more expensive than optimized software on standard platforms. However, for some applications, dedicated hardware may still be worth it, especially if you have larger datasets or are processing multiple faces for age and gender recognition simultaneously.

Focus on What Matters for Age and Gender Recognition

Think about the features that really matter to your age and gender recognition application, like MxFace, and build those into your architecture from the start. Intentional planning beats impulse-adding a feature later that turns out to be more computationally expensive in the long run.

FAQs

1. How to Remove Gender Bias in Machine Learning Models?

Train the model on balanced and diverse data so that it doesn't favor any one gender. Test the model regularly, ideally measuring accuracy separately for each group, so that you catch biased results as soon as they appear. If you find bias, retrain or fine-tune the model with more balanced and inclusive data.
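A regular bias check can be as simple as computing accuracy per group and looking at the gap. The records below are made-up placeholders, and the function names are assumptions of this sketch; accuracy gap is one possible fairness measure among several.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation results: (group, true label, predicted label).
records = [
    ("female", "female", "female"),
    ("female", "female", "male"),
    ("female", "female", "female"),
    ("female", "female", "female"),
    ("male", "male", "male"),
    ("male", "male", "male"),
    ("male", "male", "male"),
    ("male", "male", "male"),
]

per_group = accuracy_by_group(records)
gap = accuracy_gap(per_group)
print(per_group, f"gap={gap:.2f}")
```

A gap that grows between releases is a signal to rebalance the training data or fine-tune before shipping.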

2. How Does AI Recognize Gender by the Face Alone?

AI looks at details like the shape of the jawline, the distance between the eyes, and many other subtle facial features. Convolutional neural networks process these features and assign the face to a gender category based on patterns in their training data that are commonly associated with male and female faces.
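The final "assign to a category" step usually amounts to turning the network's raw output scores into probabilities and picking the largest. The sketch below assumes a two-class output; the label order and the score values are hypothetical and depend entirely on the model you load.

```python
import math

# Assumed label order; check the documentation of the model you actually use.
GENDER_LABELS = ["Male", "Female"]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, labels):
    """Return the most likely label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


# Hypothetical raw scores from the last layer of a gender classifier.
raw_scores = [0.4, 2.1]

label, confidence = classify(raw_scores, GENDER_LABELS)
print(f"{label} ({confidence:.1%})")
```

Keeping the probability alongside the label lets an application decline to answer when the model is not confident.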

3. Which is Better Between PCA and CNN for Facial Recognition?

CNN is generally better because it learns patterns at multiple layers of abstraction, whereas PCA (Principal Component Analysis) reduces the dimensionality of the data and captures only basic, linear patterns. As a result, CNNs are typically far more accurate for facial recognition and cope better with real-world challenges like different angles, lighting, and expressions.

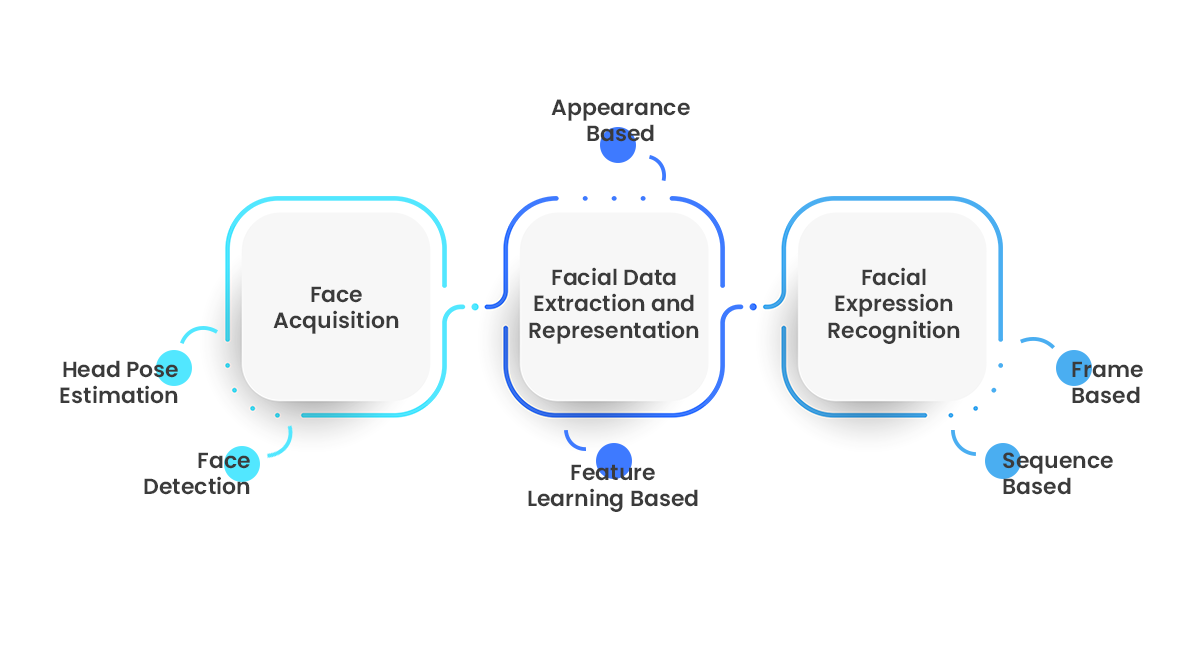

4. How Does the Facial Recognition API Work?

A Facial Recognition API analyzes the distance between the eyes, the size of the nose, and the shape of the jawline and converts them into a series of numbers that the computer can understand and compare with stored data. The API checks if these numbers match any known faces in its database.
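The matching step can be sketched as a nearest-neighbor search over stored number vectors. This is an illustration of the general idea, not any particular vendor's API: the three-value vectors, the names, and the 0.6 distance threshold are all hypothetical, and real systems use much longer vectors.

```python
import math


def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match(query, database, threshold=0.6):
    """Return (name, distance) of the closest enrolled face.

    The name is None when nothing is within the threshold.
    """
    if not database:
        return None, math.inf
    name, distance = min(
        ((n, euclidean(query, vector)) for n, vector in database.items()),
        key=lambda pair: pair[1],
    )
    return (name, distance) if distance <= threshold else (None, distance)


# Hypothetical enrolled faces, each stored as a short vector of numbers.
database = {
    "alice": [0.1, 0.9, 0.3],
    "bob": [0.8, 0.2, 0.5],
}

known_query = [0.12, 0.88, 0.31]
unknown_query = [0.0, 0.0, 0.0]

print(best_match(known_query, database))
print(best_match(unknown_query, database))
```

The threshold controls the trade-off between false matches and missed matches, so it is normally tuned on validation data instead of being fixed up front.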

5. Why is Face Detection More Difficult Than Generic Detection?

Faces vary a lot. People turn their heads in different directions, lighting changes how a face looks, and faces are sometimes partially covered by objects. Busy backgrounds can also confuse the system, making it difficult to focus on the face.
